Developer Experience is more than just Productivity metrics

Slide 1

Slide 2

DevEx disasters…

Slide 3

Bad DevEx: Common examples

- Poorly documented features (or bugs)

Slide 4

Bad DevEx: Common examples

- Poorly documented features (or bugs)
- Missing OpenAPI spec (or even APIs)

Slide 5

Bad DevEx: Common examples

- Poorly documented features (or bugs)
- Missing OpenAPI spec (or even APIs)
- PDF documentation… or access-gated

Slide 6

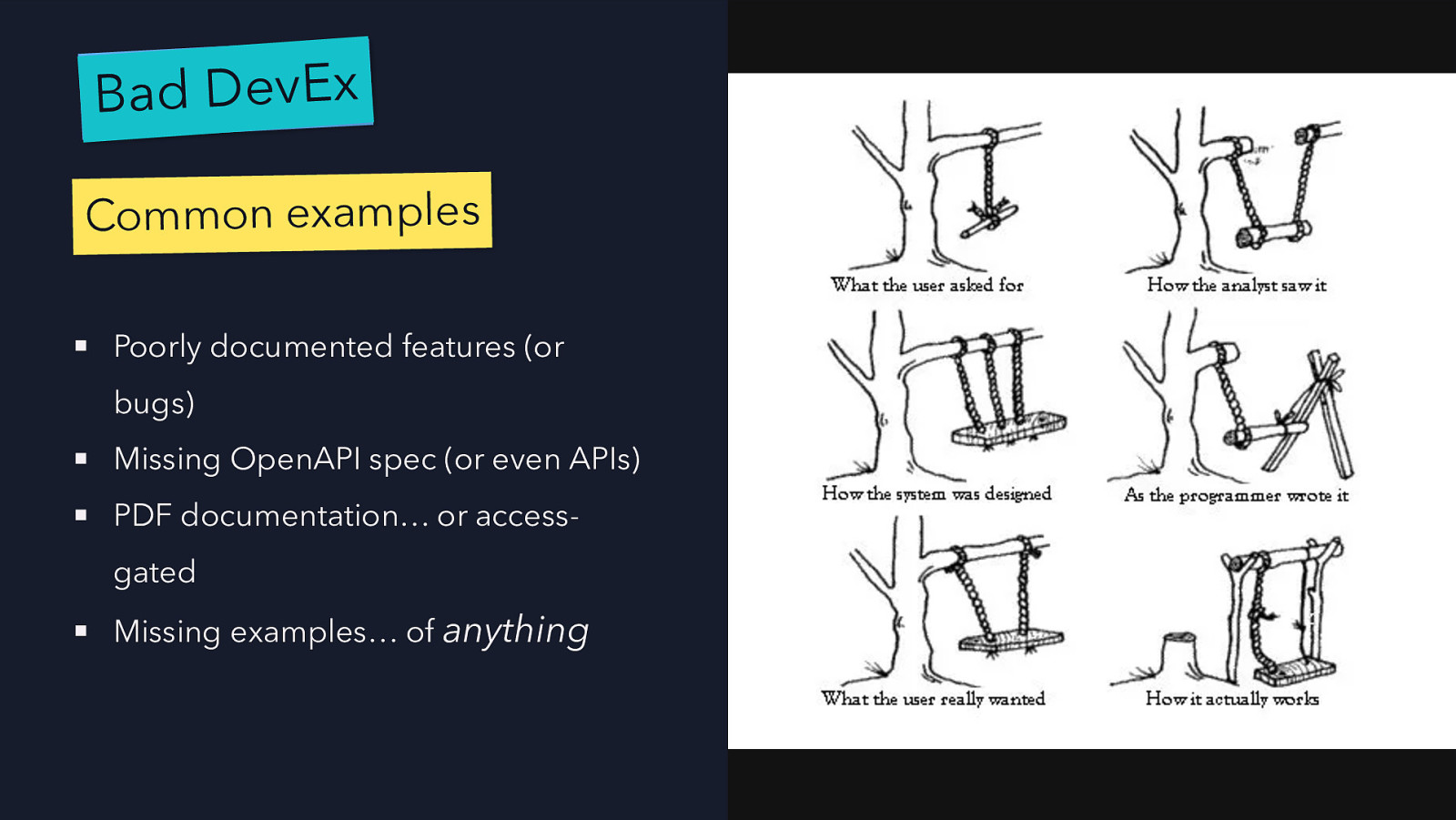

Bad DevEx: Common examples

- Poorly documented features (or bugs)
- Missing OpenAPI spec (or even APIs)
- PDF documentation… or access-gated
- Missing examples… of anything

Slide 7

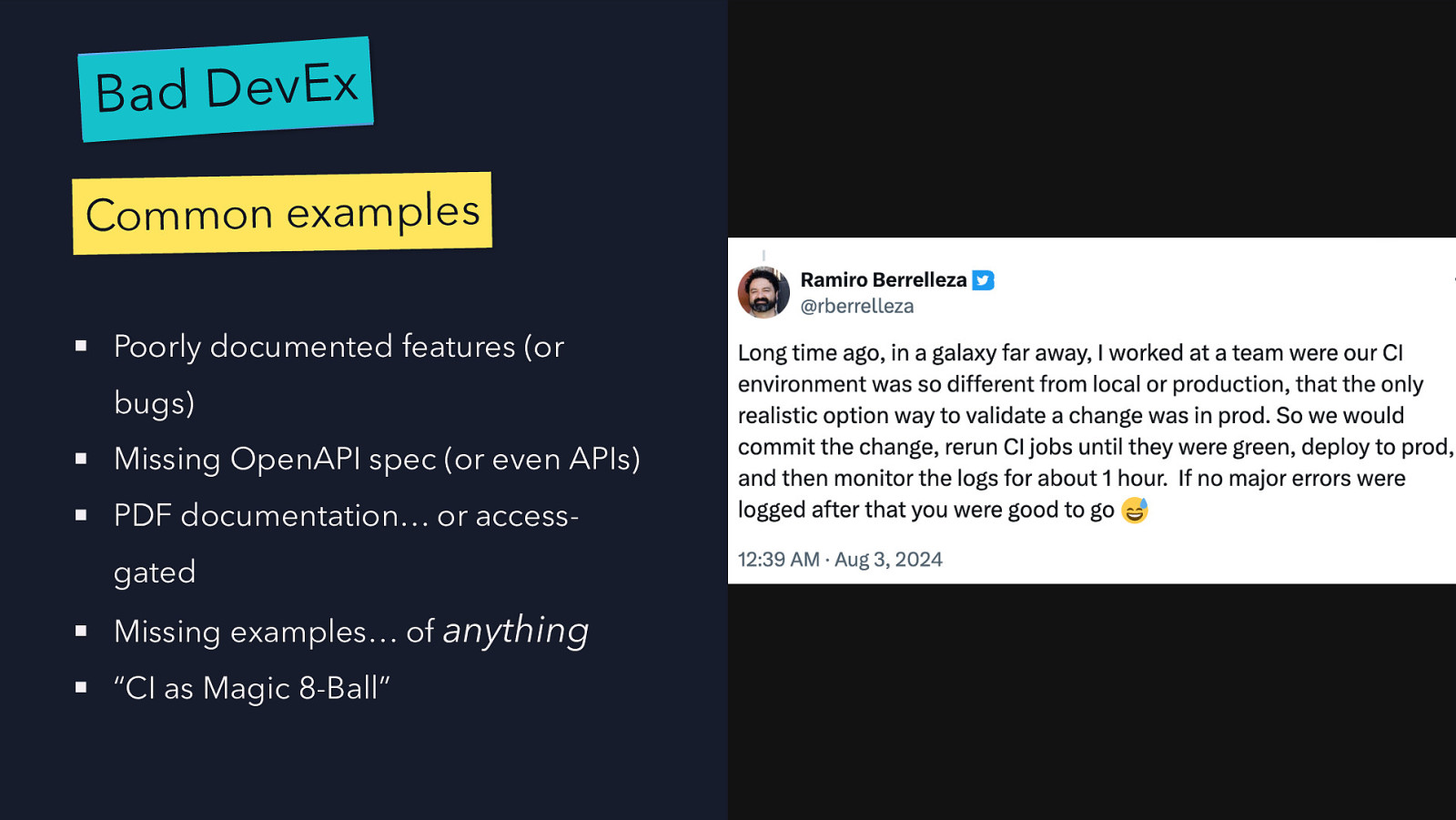

Bad DevEx: Common examples

- Poorly documented features (or bugs)
- Missing OpenAPI spec (or even APIs)
- PDF documentation… or access-gated
- Missing examples… of anything
- “CI as Magic 8-Ball”

Slide 8

Slide 9

Slide 10

Slide 11

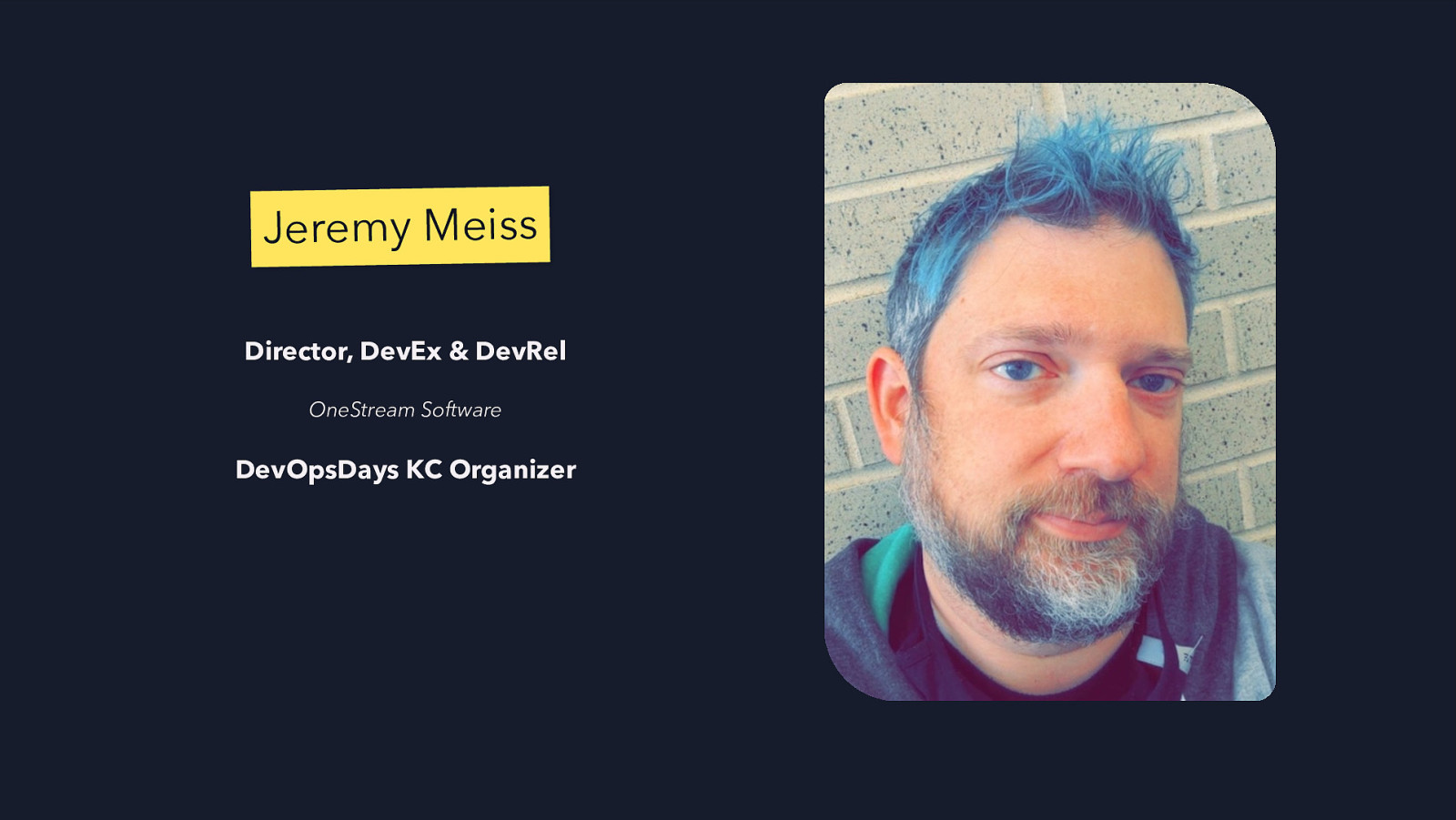

Jeremy Meiss
Director, DevEx & DevRel, OneStream Software
DevOpsDays KC Organizer

Slide 12

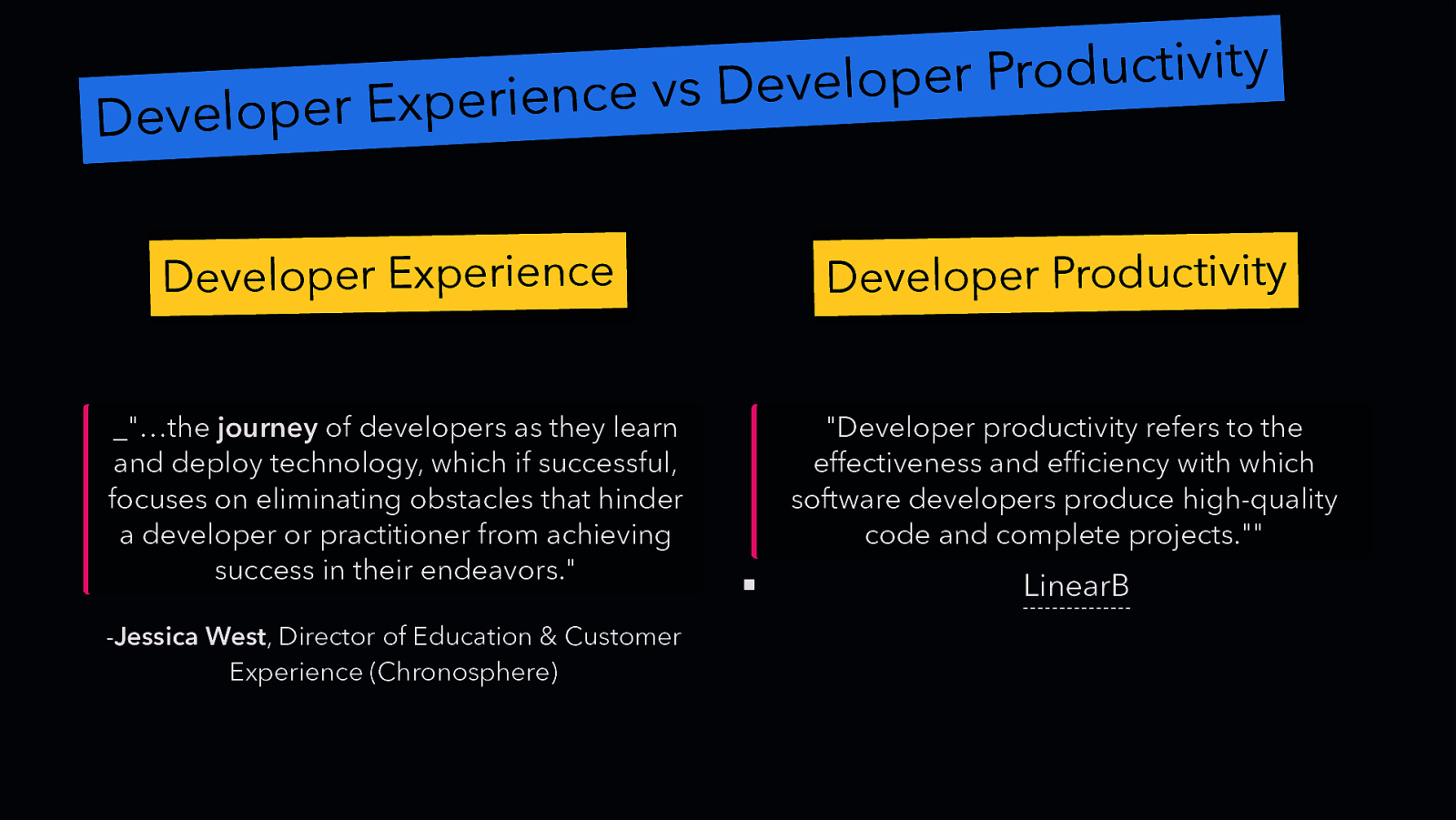

Developer Experience vs Developer Productivity

Developer Experience: “…the journey of developers as they learn and deploy technology, which if successful, focuses on eliminating obstacles that hinder a developer or practitioner from achieving success in their endeavors.” -Jessica West, Director of Education & Customer Experience (Chronosphere)

Developer Productivity: “Developer productivity refers to the effectiveness and efficiency with which software developers produce high-quality code and complete projects.” -LinearB

Slide 13

Slide 14

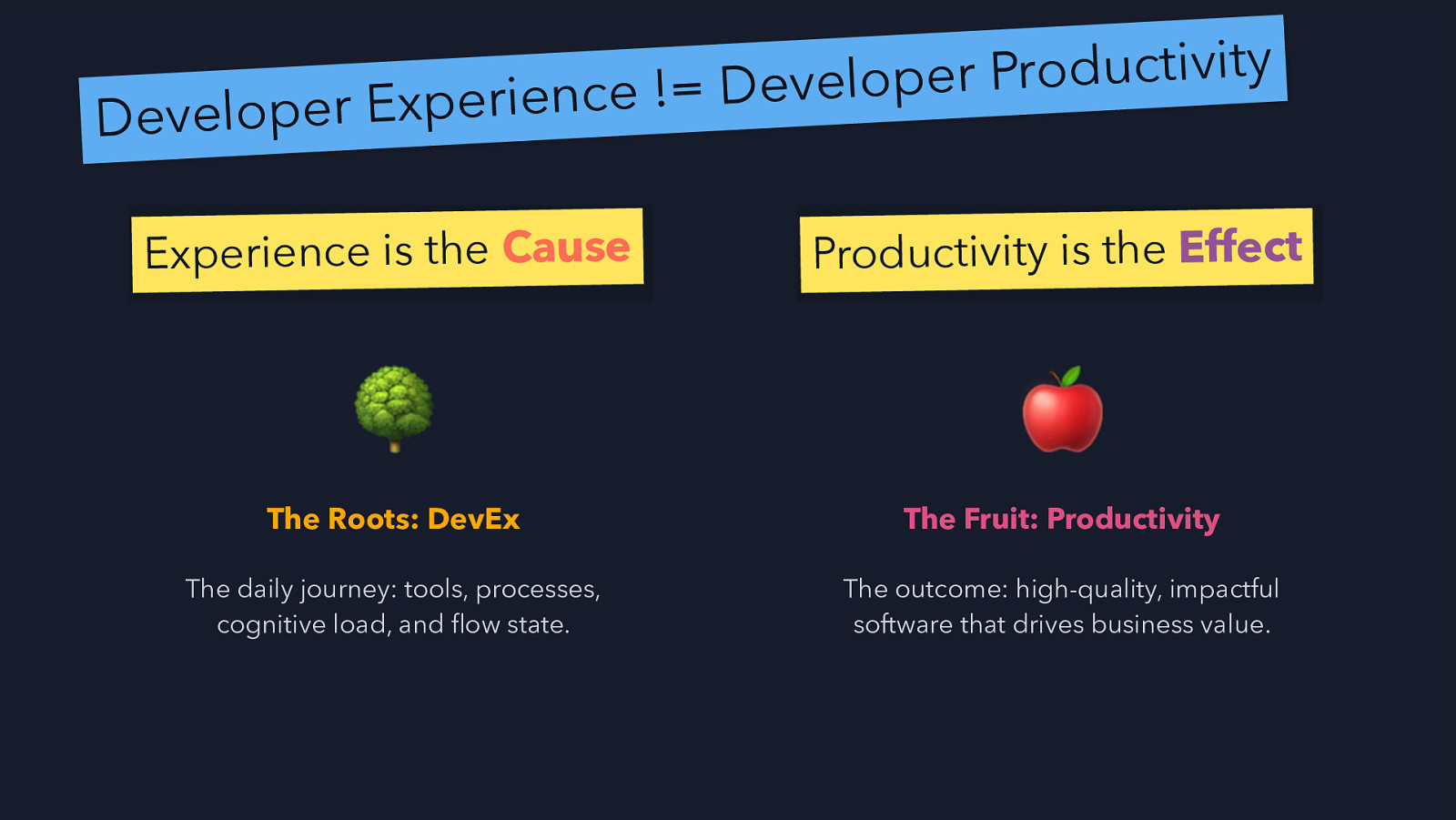

Developer Experience != Developer Productivity

More than a metric…

Slide 15

Developer Experience != Developer Productivity

Experience is the Cause. Productivity is the Effect.

- The Roots: DevEx. The daily journey: tools, processes, cognitive load, and flow state.
- The Fruit: Productivity. The outcome: high-quality, impactful software that drives business value.

Slide 16

DevEx isn’t new REF: F. Fagerholm and J. Münch, “Developer experience: Concept and definition,” 2012 International Conference on Software and System Process (ICSSP), Zurich, Switzerland, 2012.

Slide 17

DevEx isn’t new

“New ways of working such as globally distributed development or the integration of self-motivated external developers into software ecosystems will require a better and more comprehensive understanding of developers’ feelings, perceptions, motivations and identification with their tasks in their respective project environments.”

REF: F. Fagerholm and J. Münch, “Developer experience: Concept and definition,” 2012.

Slide 18

DevEx isn’t new

“…developer experience could be defined as a means for capturing how developers think and feel about their activities within their working environments, with the assumption that an improvement of the developer experience has positive impacts on characteristics such as sustained team and project performance.”

REF: F. Fagerholm and J. Münch, “Developer experience: Concept and definition,” 2012.

Slide 19

From Lines of Code to Value Streams

- Leaders agree that traditional metrics like LOC are ineffective.
- Principle of modern measurement: Focus on systems, not just individuals.
- Prominent frameworks now guide the industry: DORA, SPACE, and GetDX Core 4.

Slide 20

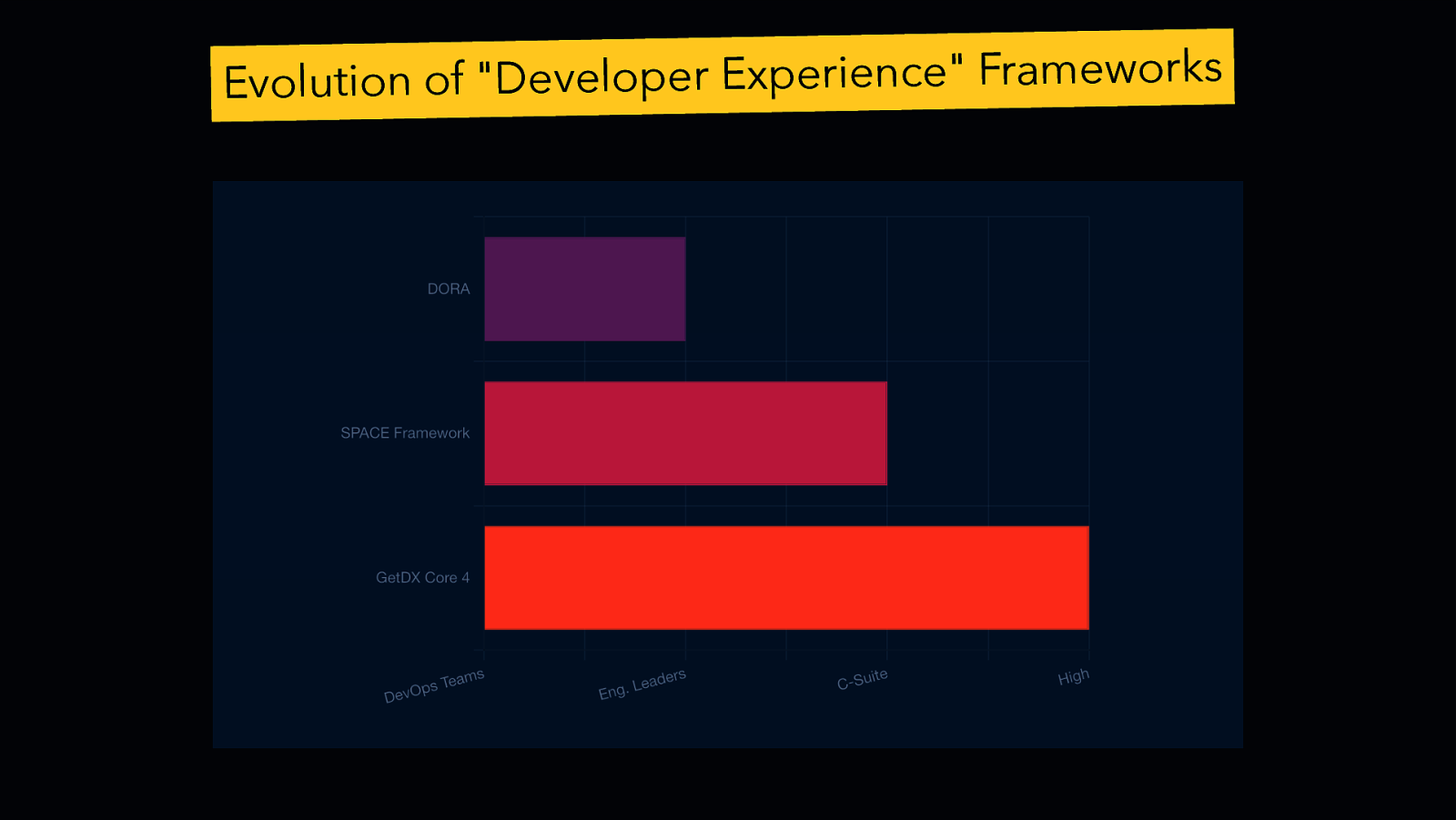

Evolution of “Developer Experience” Frameworks

Slide 21

The Evolutionary Path of Measurement

- 2014-2018: DORA Metrics Emerge. DORA established the gold standard for measuring DevOps pipeline health through rigorous research, proving that speed and reliability are not trade-offs…
- 2021: The SPACE Framework Broadens the Scope. The SPACE framework introduced a holistic, human-centric model, arguing that productivity is multi-dimensional and emphasizing developer satisfaction, well-being, and collaboration as critical components of performance…
- 2024: GetDX Core 4 Unifies for Business Impact. GetDX Core 4 was created as a practical, prescriptive framework that attempts to unify DORA and SPACE, linking engineering efforts to tangible business outcomes like ROI and revenue.

Slide 22

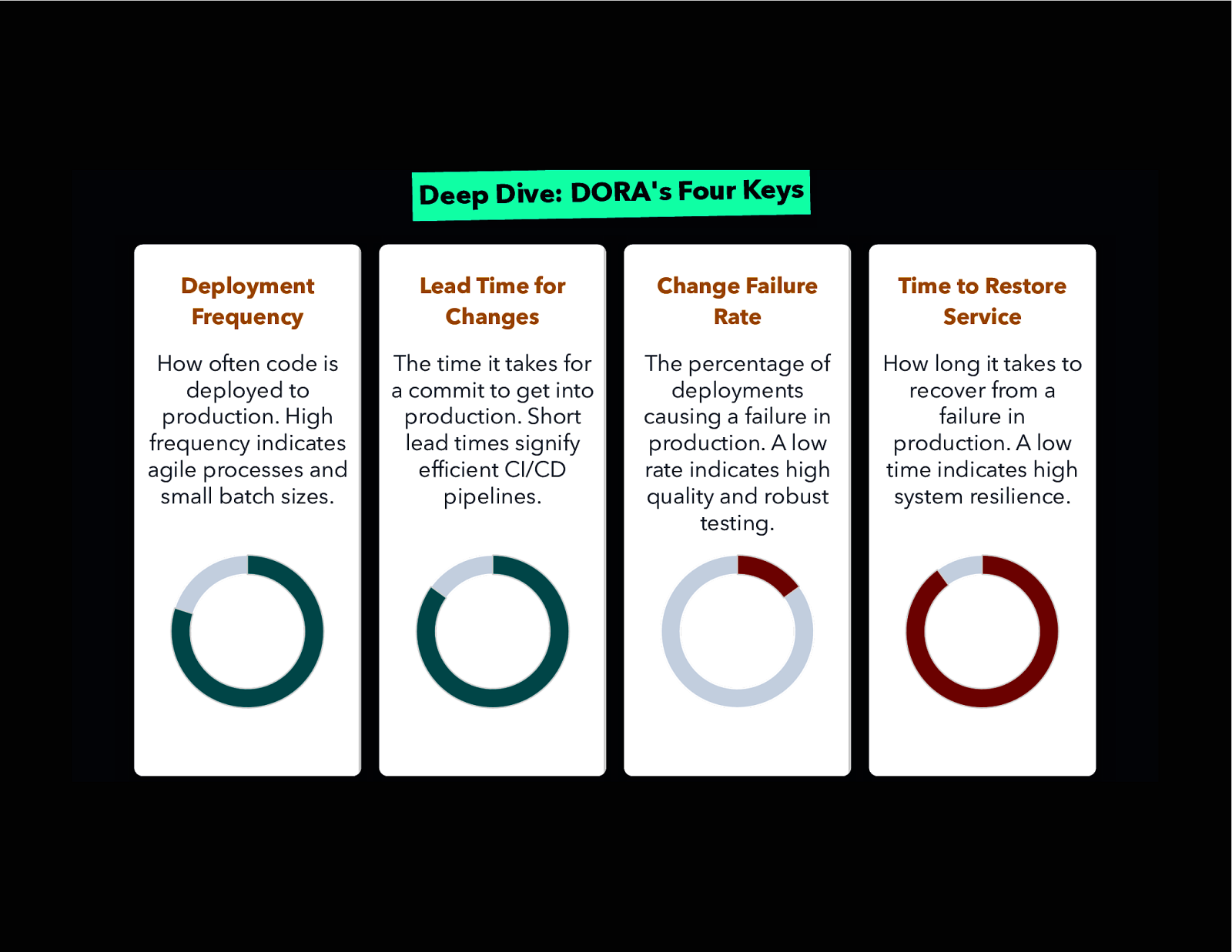

Deep Dive: DORA’s Four Keys

- Deployment Frequency: How often code is deployed to production. High frequency indicates agile processes and small batch sizes.
- Lead Time for Changes: The time it takes for a commit to get into production. Short lead times signify efficient CI/CD pipelines.
- Change Failure Rate: The percentage of deployments causing a failure in production. A low rate indicates high quality and robust testing.
- Time to Restore Service: How long it takes to recover from a failure in production. A low time indicates high system resilience.
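As a rough illustration of how the four keys could be derived from deployment records, here is a minimal Python sketch. The `Deployment` record, its field names, and the `dora_four_keys` function are hypothetical and not part of DORA or any tool; the choice of medians and of hours as the unit is also an assumption made for this example.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import median
from typing import Optional


@dataclass
class Deployment:
    """Hypothetical record for one production deployment."""
    commit_time: datetime                      # when the change was committed
    deploy_time: datetime                      # when it reached production
    failed: bool = False                       # did it cause a production failure?
    restored_time: Optional[datetime] = None   # when service was restored, if it failed


def _hours(start: datetime, end: datetime) -> float:
    return (end - start).total_seconds() / 3600


def dora_four_keys(deployments: list[Deployment], period_days: float) -> dict:
    """Summarize the four keys over a reporting period (assumed definitions)."""
    total = len(deployments)
    failures = [d for d in deployments if d.failed]
    lead_times = [_hours(d.commit_time, d.deploy_time) for d in deployments]
    restore_times = [
        _hours(d.deploy_time, d.restored_time)
        for d in failures
        if d.restored_time is not None
    ]
    return {
        "deployments_per_day": total / period_days,
        "median_lead_time_hours": median(lead_times) if lead_times else None,
        "change_failure_rate": len(failures) / total if total else None,
        "median_time_to_restore_hours": median(restore_times) if restore_times else None,
    }
```

In practice the inputs would come from CI/CD and incident tooling, and a team would agree on its own definitions of "failure" and "restored" before comparing numbers over time.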

Slide 23

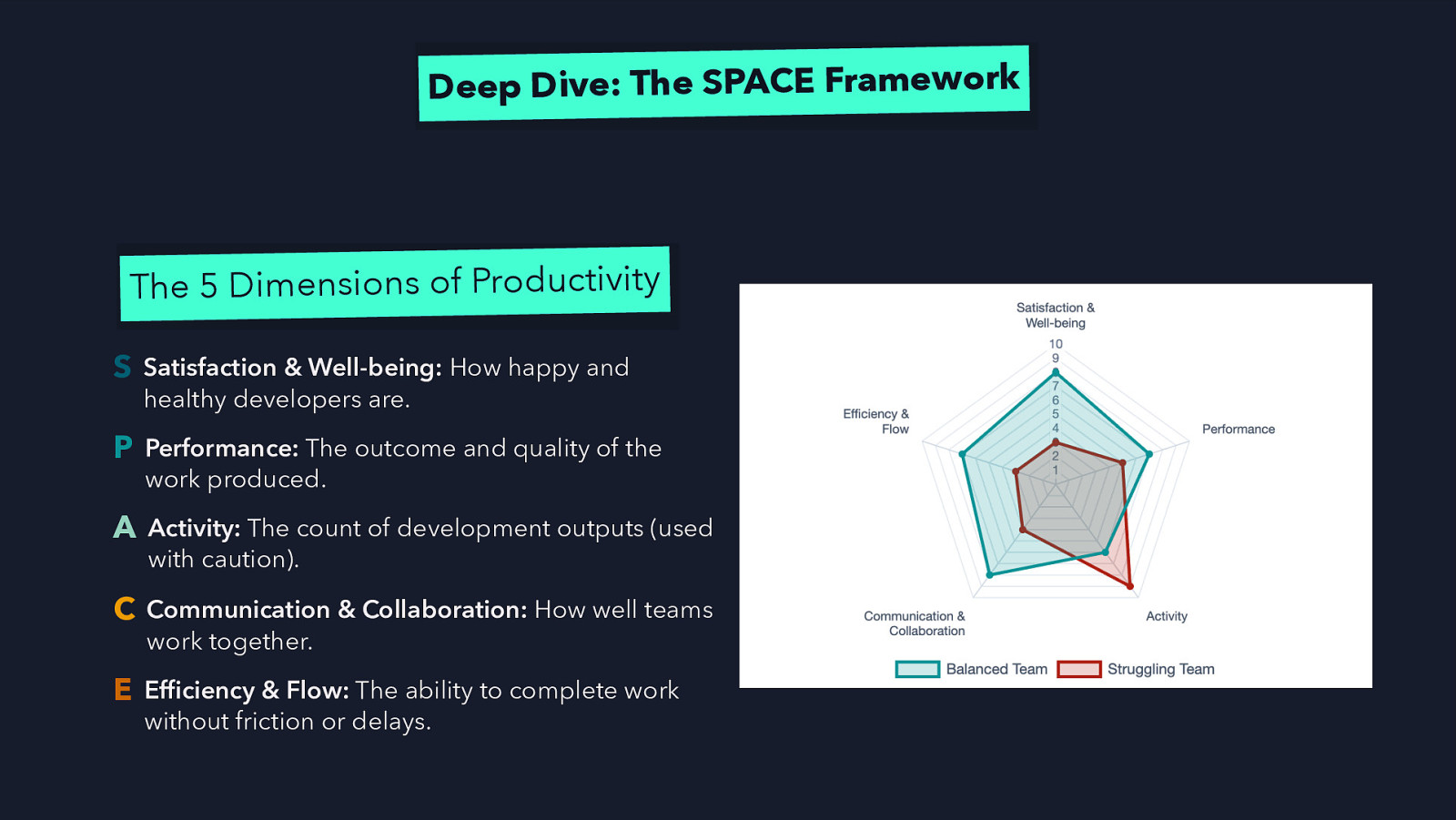

Deep Dive: The SPACE Framework

The 5 Dimensions of Productivity

- S: Satisfaction & Well-being: How happy and healthy developers are.
- P: Performance: The outcome and quality of the work produced.
- A: Activity: The count of development outputs (used with caution).
- C: Communication & Collaboration: How well teams work together.
- E: Efficiency & Flow: The ability to complete work without friction or delays.

Slide 24

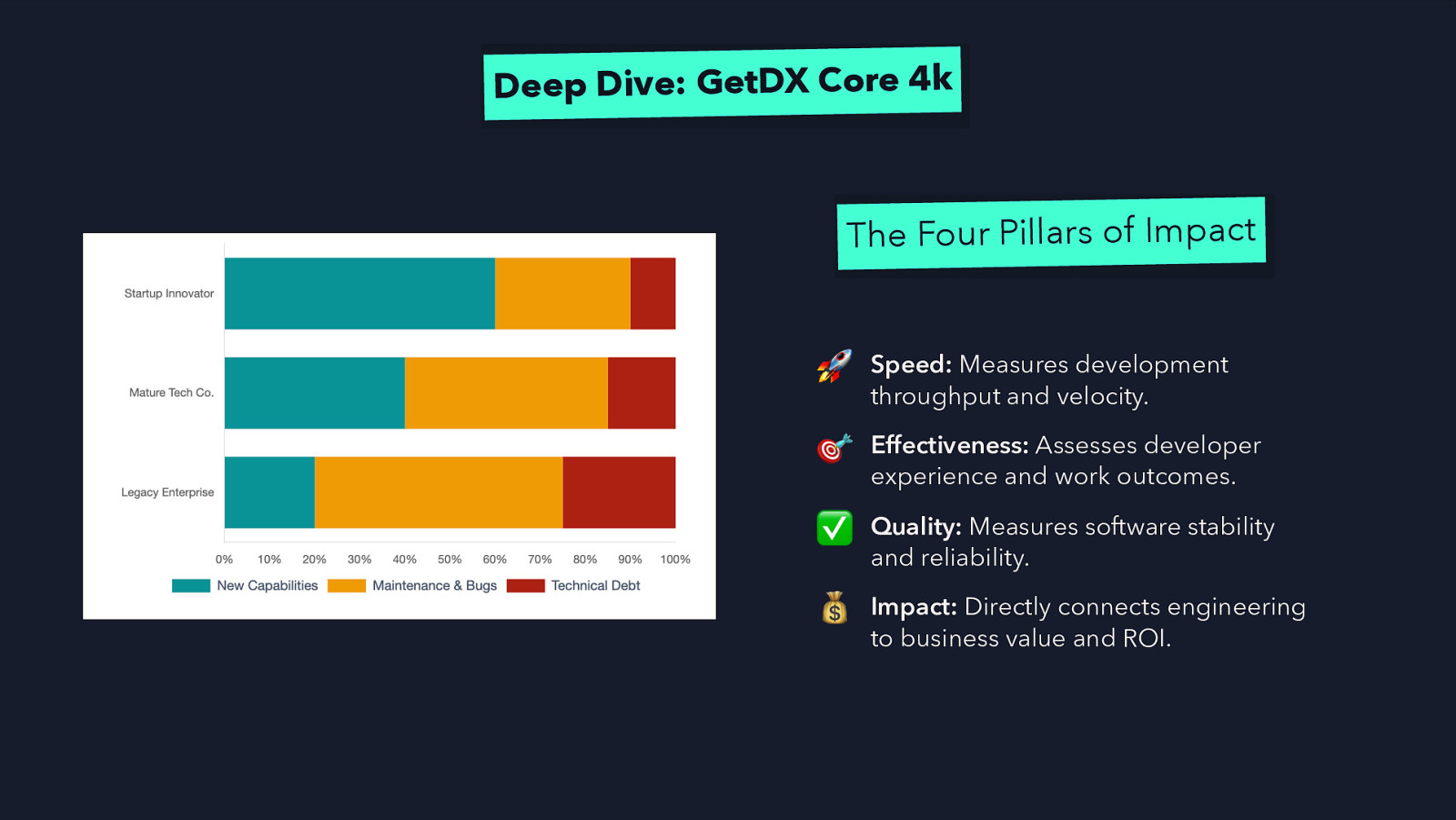

Deep Dive: GetDX Core 4

The Four Pillars of Impact

- Speed: Measures development throughput and velocity.
- Effectiveness: Assesses developer experience and work outcomes.
- Quality: Measures software stability and reliability.
- Impact: Directly connects engineering to business value and ROI.

Slide 25

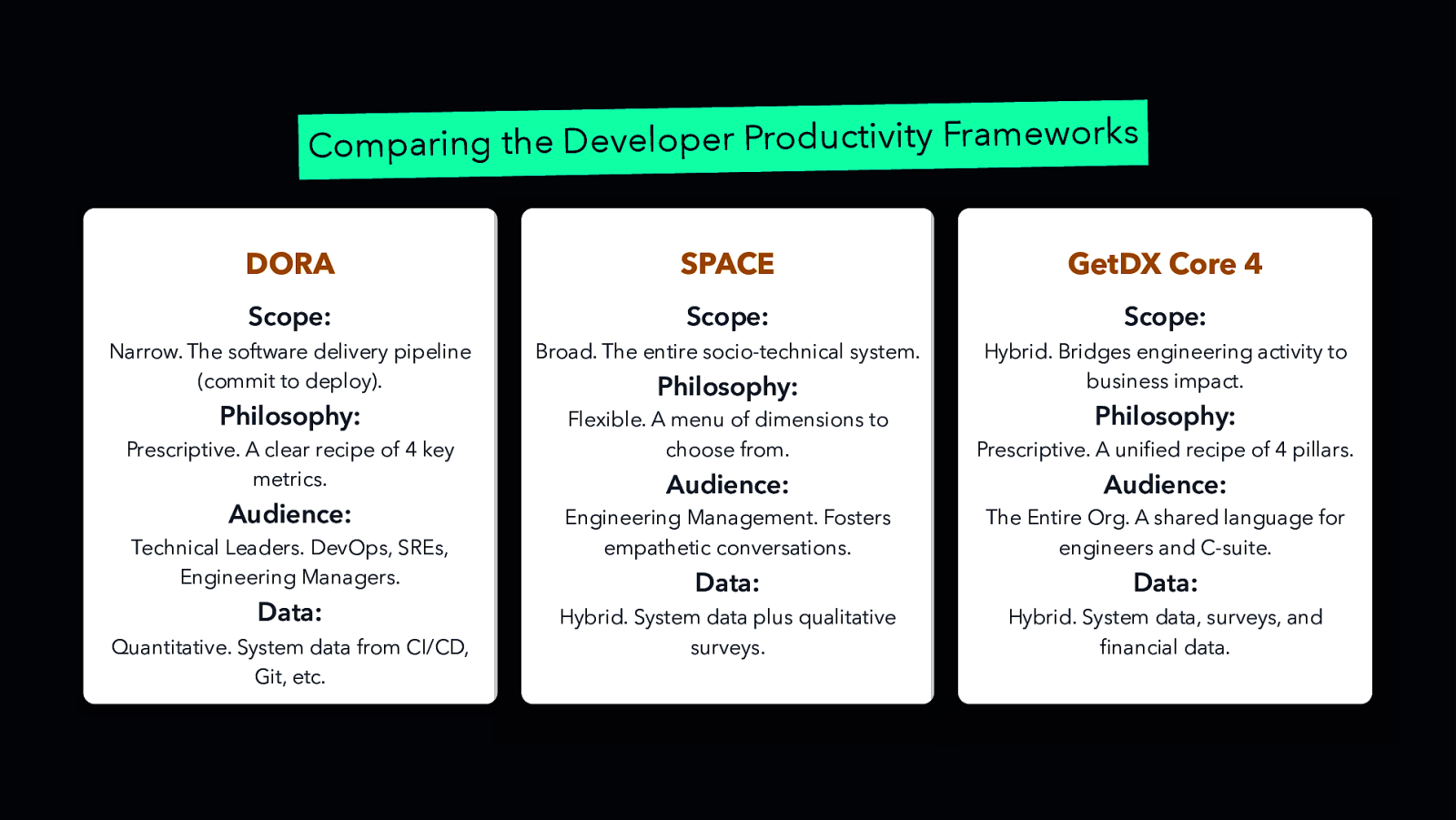

Comparing the Developer Productivity Frameworks

| | DORA | SPACE | GetDX Core 4 |
| --- | --- | --- | --- |
| Scope | Narrow. The software delivery pipeline (commit to deploy). | Broad. The entire socio-technical system. | Hybrid. Bridges engineering activity to business impact. |
| Philosophy | Prescriptive. A clear recipe of 4 key metrics. | Flexible. A menu of dimensions to choose from. | Prescriptive. A unified recipe of 4 pillars. |
| Audience | Technical Leaders. DevOps, SREs, Engineering Managers. | Engineering Management. Fosters empathetic conversations. | The Entire Org. A shared language for engineers and C-suite. |
| Data | Quantitative. System data from CI/CD, Git, etc. | Hybrid. System data plus qualitative surveys. | Hybrid. System data, surveys, and financial data. |

Slide 26

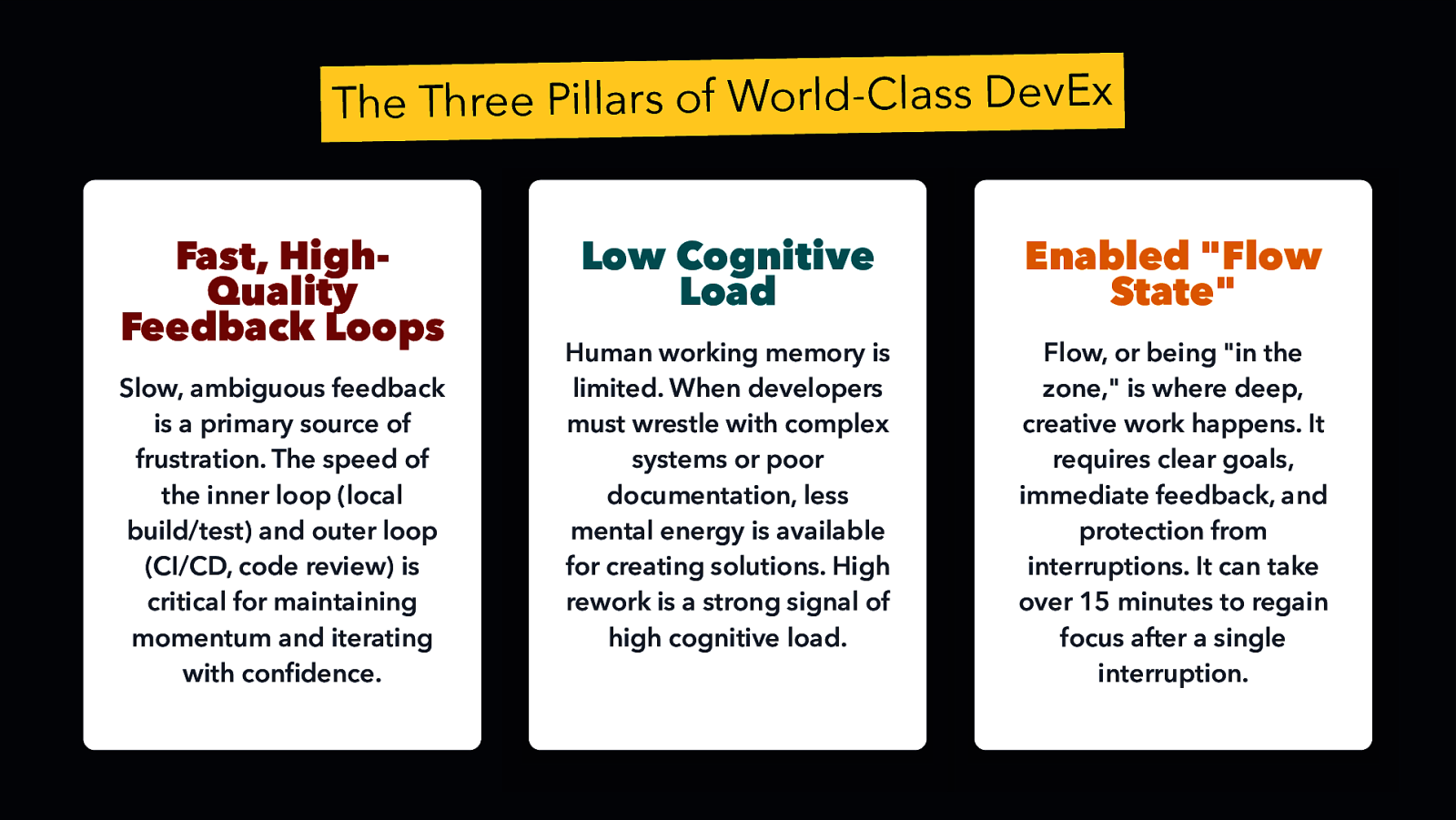

The Three Pillars of World-Class DevEx

- Fast, High-Quality Feedback Loops: Slow, ambiguous feedback is a primary source of frustration. The speed of the inner loop (local build/test) and outer loop (CI/CD, code review) is critical for maintaining momentum and iterating with confidence.
- Low Cognitive Load: Human working memory is limited. When developers must wrestle with complex systems or poor documentation, less mental energy is available for creating solutions. High rework is a strong signal of high cognitive load.
- Enabled “Flow State”: Flow, or being “in the zone,” is where deep, creative work happens. It requires clear goals, immediate feedback, and protection from interruptions. It can take over 15 minutes to regain focus after a single interruption.

Slide 27

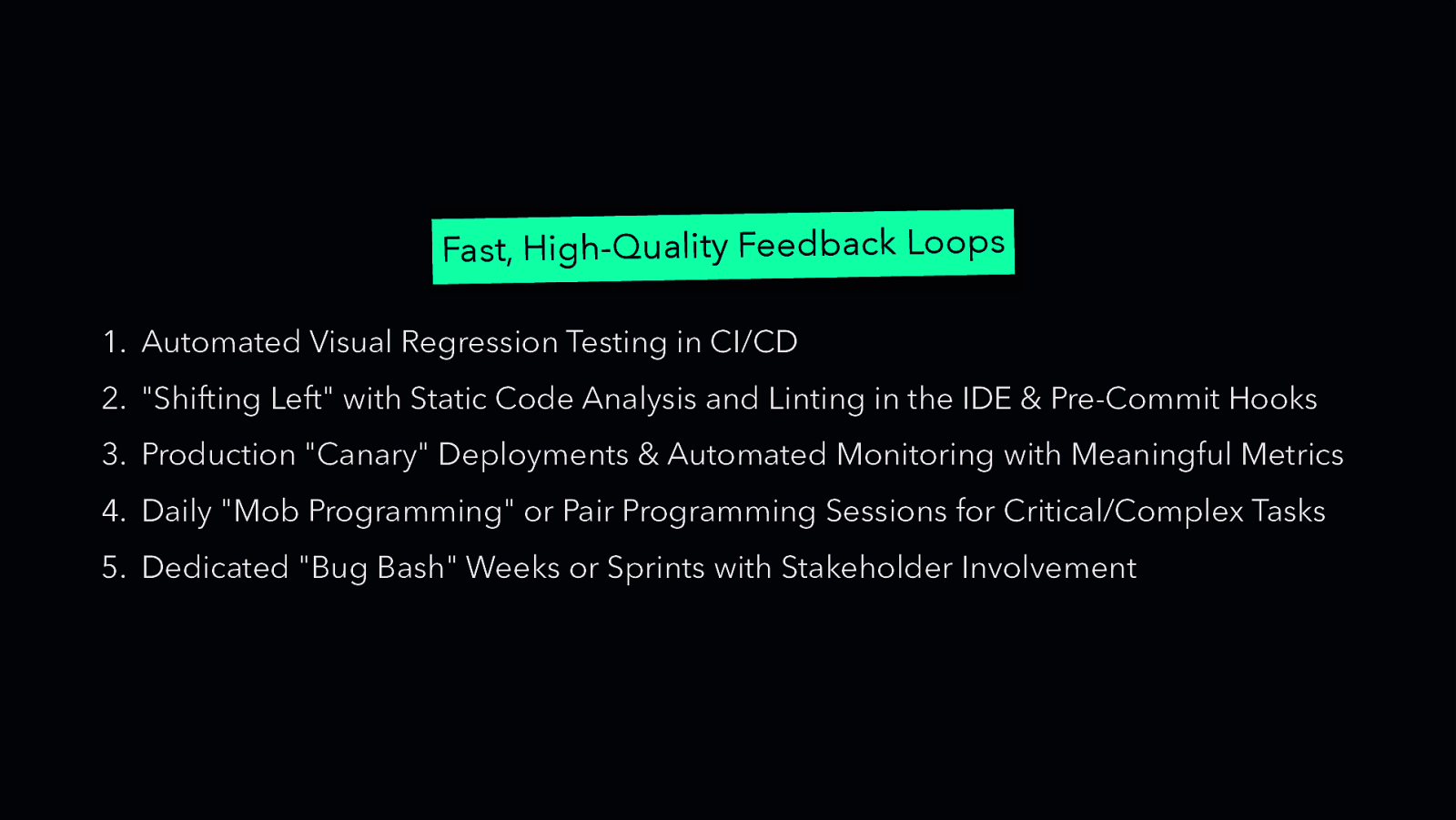

Fast, High-Quality Feedback Loops

1. Automated Visual Regression Testing in CI/CD
2. “Shifting Left” with Static Code Analysis and Linting in the IDE & Pre-Commit Hooks
3. Production “Canary” Deployments & Automated Monitoring with Meaningful Metrics
4. Daily “Mob Programming” or Pair Programming Sessions for Critical/Complex Tasks
5. Dedicated “Bug Bash” Weeks or Sprints with Stakeholder Involvement

Slide 28

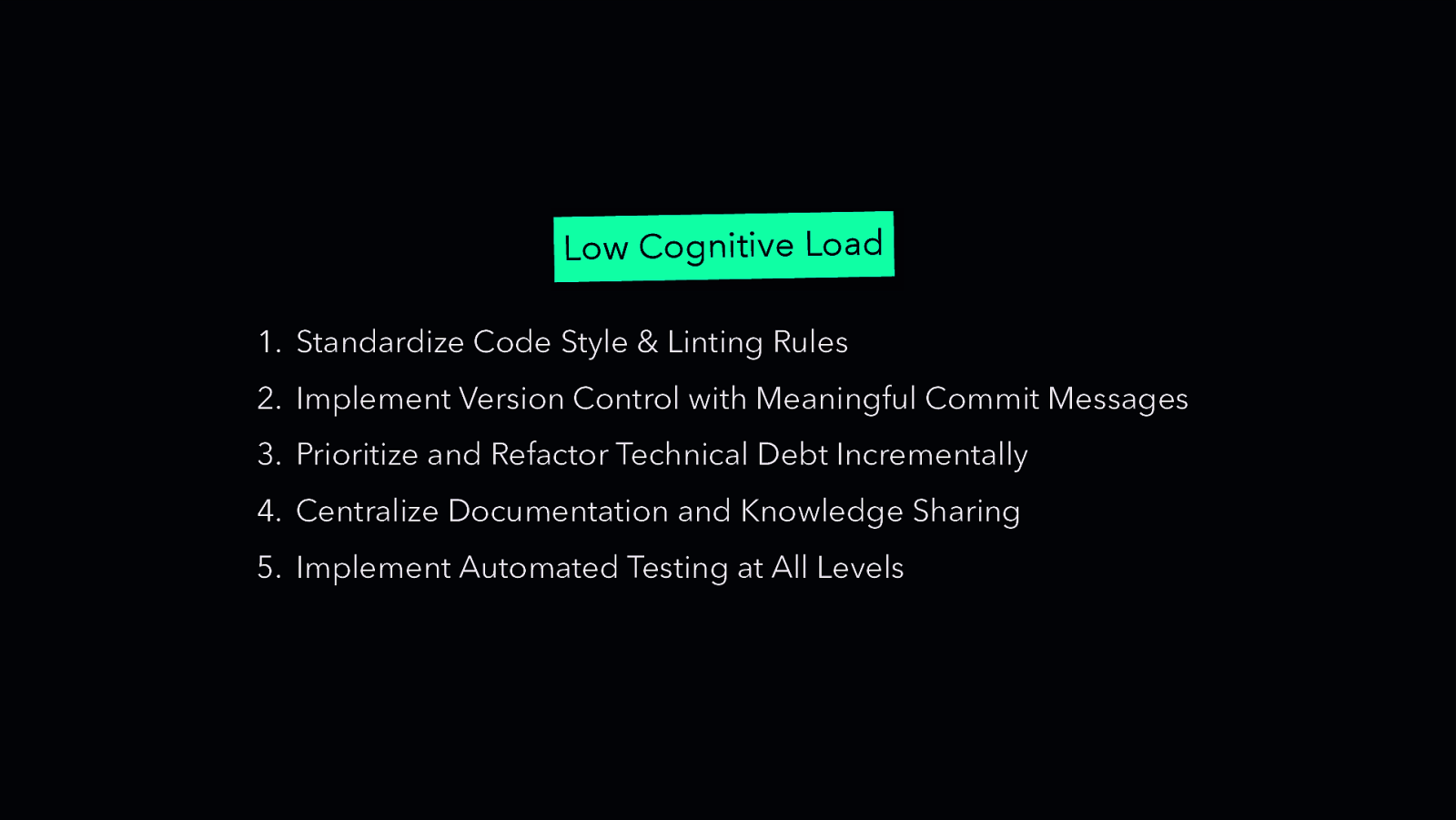

Low Cognitive Load

1. Standardize Code Style & Linting Rules
2. Implement Version Control with Meaningful Commit Messages
3. Prioritize and Refactor Technical Debt Incrementally
4. Centralize Documentation and Knowledge Sharing
5. Implement Automated Testing at All Levels

Slide 29

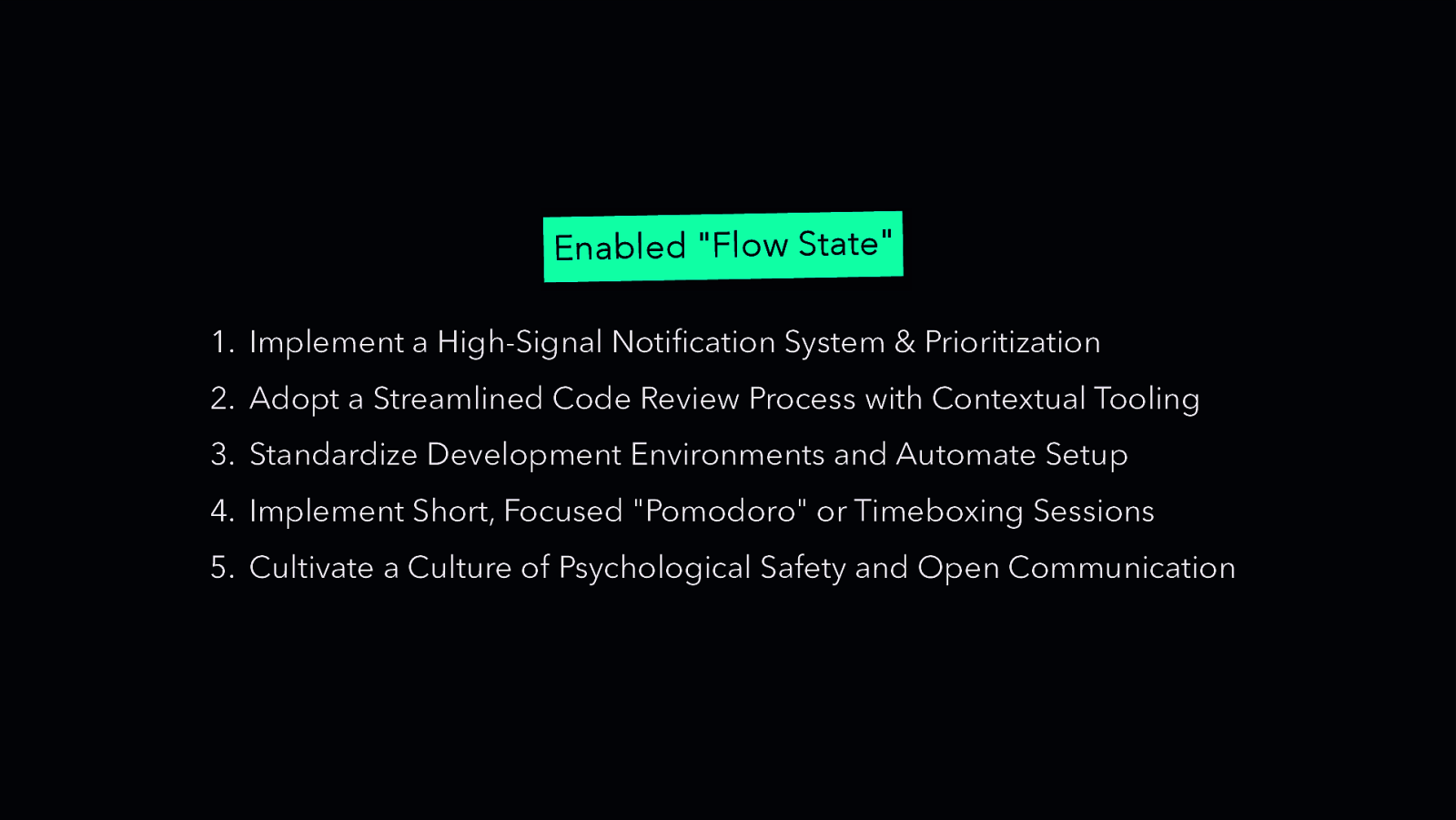

Enabled “Flow State”

1. Implement a High-Signal Notification System & Prioritization
2. Adopt a Streamlined Code Review Process with Contextual Tooling
3. Standardize Development Environments and Automate Setup
4. Implement Short, Focused “Pomodoro” or Timeboxing Sessions
5. Cultivate a Culture of Psychological Safety and Open Communication

Slide 30

Avoid the Gamification Trap

Use Metrics for Improvement, Not Judgment

“When a measure becomes a target, it ceases to be a good measure.” (Goodhart’s Law)

Slide 31

So what do we measure?

- Cycle Time
- PR Review Time
- Rework Rate
- Meeting Load
- Time to First Commit
- Perceived Focus Time
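Two of these measures can be sketched from pull request timestamps. The Python below is only an illustration: the `PullRequest` record and its field names are hypothetical, and the definitions used (cycle time as first commit to merge, review time as PR opened to first review) are assumptions, since the slides do not define them and teams draw these boundaries differently.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import median


@dataclass
class PullRequest:
    """Hypothetical timestamps for one merged pull request."""
    first_commit_at: datetime
    opened_at: datetime
    first_review_at: datetime
    merged_at: datetime


def hours_between(start: datetime, end: datetime) -> float:
    return (end - start).total_seconds() / 3600


def summarize_prs(prs: list[PullRequest]) -> dict:
    """Median cycle time and review wait, in hours (assumed definitions).

    Raises statistics.StatisticsError if `prs` is empty.
    """
    return {
        # Assumption: cycle time runs from first commit to merge.
        "median_cycle_time_hours": median(
            hours_between(p.first_commit_at, p.merged_at) for p in prs
        ),
        # Assumption: review time is the wait from PR opened to first review.
        "median_review_wait_hours": median(
            hours_between(p.opened_at, p.first_review_at) for p in prs
        ),
    }
```

Medians are used here instead of means so that a single long-lived PR does not dominate the summary.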

Slide 32

Cycle Time

- Implement and enforce “Small Batch” Size Approach
- Optimize Code Review Process with Developer-Focused Tooling and Practices
- Invest in Test Automation and CI/CD Pipelines
- Improve Development Environment Setup and Standardization
- Proactively Identify and Remove Blocking Issues and Dependencies

Slide 33

PR Review Time

- Enforce “Small PR” Guidelines and Automation
- Implement a Reviewer Rotation and/or “Reviewer Roulette” System
- Mandate Clear and Concise PR Descriptions and Context
- Establish Service Level Agreements (SLAs) for PR Reviews and Make Them Visible
- Invest in Automated Code Analysis and CI/CD Integration

Slide 34

Rework Rate

- Implement Comprehensive Code Reviews with a Focused Checklist
- Refine User Stories with Clearer Acceptance Criteria and Examples
- Invest in Better Tooling and Automation for Testing
- Implement a Robust Definition of Done (DoD) and Enforce It
- Improve Feedback Loops and Communication

Slide 35

Meeting Load

- Implement a “Meeting-Free Day” (or Half-Day) Policy
- Audit Meeting Invitations and Participation
- Standardize Meeting Agendas and Timeboxing
- Promote Asynchronous Communication Tools & Practices
- Implement a “Meeting Budget” or “Meeting Credit” System

Slide 36

Time to First Commit

- Provide Ready-to-Run Starter Projects/Templates
- Automate Environment Setup and Onboarding
- Simplify Code Contribution Process with Clear Guidelines and Tooling
- Offer Short, Focused “First Contribution” Tasks (aka “Good First Issues”)
- Provide Active Mentorship and Support (paired with tooling)

Slide 37

Perceived Focus Time

- Optimize Build Times with Incremental Builds and Caching
- Implement a “Quiet Period” During Deployments/Integrations
- Prioritize and Reduce Notification Overload
- Automate Repetitive Tasks with Scripting or Tools
- Improve Error Messaging and Debugging Tools

Slide 38

Slide 39

Build a Healthy Measurement Culture

- No framework is a silver bullet
- Continuous improvement, not judgment
- Communicate the ‘why’
- Involve your team
- Focus on trends, not absolutes
- Combine quantitative data with qualitative human insights
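One way to read “trends, not absolutes” is to compare a recent window of a metric against the window before it, instead of judging any single value. The sketch below is an assumption about how a team might do that; the `relative_trend` function and its four-sample default window are hypothetical.

```python
from statistics import mean


def relative_trend(values: list[float], window: int = 4) -> float:
    """Relative change between the latest window and the one before it.

    `values` is a chronological series of one metric (for example, weekly
    median cycle time). A result of -0.2 means the recent window averages
    20% lower than the previous one.
    """
    if window < 1 or len(values) < 2 * window:
        raise ValueError("need at least two full windows of data")
    recent = mean(values[-window:])
    previous = mean(values[-2 * window:-window])
    if previous == 0:
        raise ValueError("previous window averages zero; relative change is undefined")
    return (recent - previous) / previous
```

For example, `relative_trend([10, 10, 10, 10, 8, 8, 8, 8])` compares the last four samples with the four before them. A number like this is a prompt for a conversation with the team, not a score to rank people by.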

Slide 40

Conclusion

Slide 41

DevEx is… “ruthlessly eliminating barriers (and blockers) that keep your practitioners from being successful”

Slide 42

@jerdog.dev /in/jeremymeiss Thank you! @jerdog @jerdog@hachyderm.io jmeiss.me @IAmJerdog

Slide 43

END